
Internal predictive model characterizes brain's neural activity during listening and imagining music

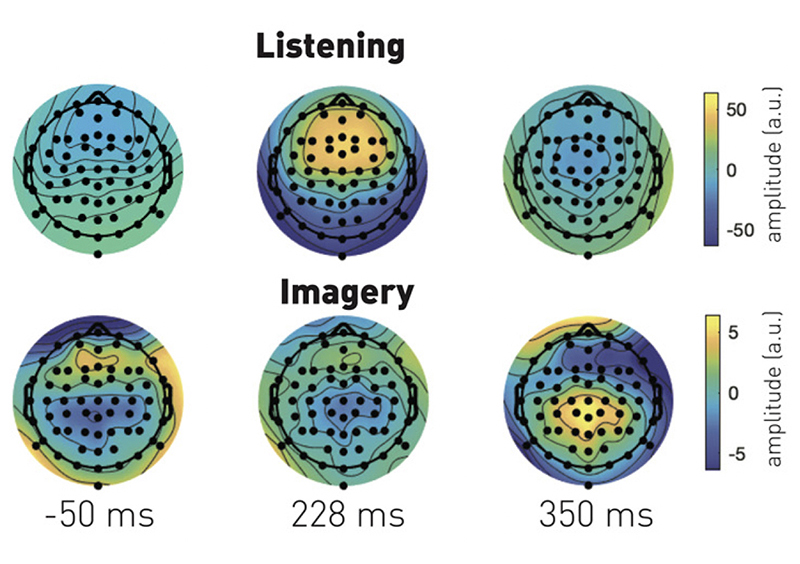

ERP Topographies. Participant-averaged topographic distributions of the event-related potential (ERP) for all notes at least 500 ms away from the metronome. (Fig. 7C from Music of Silence Part 1.)

You’ve just attended an exciting concert by your favorite musical group. Afterwards, in the car, after arriving at home, and over the next few days, you catch yourself replaying your favorite songs in your head. You “hear” the music in your brain—the melodies, the fast and slow parts, even the places where the songs dramatically pause before starting up again.

The term for this is “musical imagery”—the voluntary internal hearing of music in the mind without the need for physical action or external stimulation.

Recently, scientists have begun to explore what happens in our brains when we imagine music.

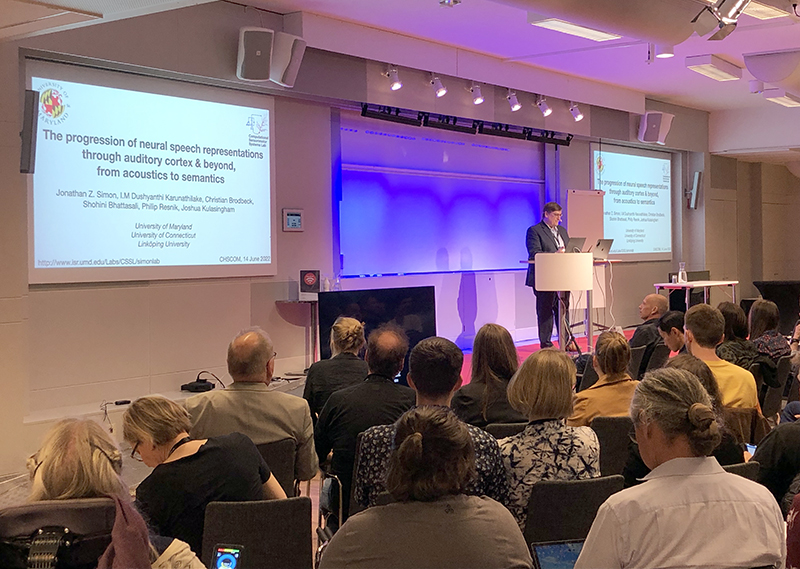

Two new papers on “the music of silence,” by Professor Shihab Shamma (ECE/ISR) and his colleagues in the Laboratoire des Systèmes Perceptifs at the École normale supérieure, PSL University in Paris, were published this fall in the Journal of Neuroscience. The papers were written by Shamma, his postdoctoral researcher Giovanni M. Di Liberto, and his Ph.D. student Guilhem Marion.

Neuroscientists know which parts of the brain are activated when we experience musical imagery. However, they do not yet know the extent to which responses to imagined music preserve the features of responses to actual music. For example, do responses to imagined music contain detailed temporal dynamics? Do our melodic expectations modulate responses to imagined music, as they do when we hear music played? And does our brain actively predict upcoming musical notes, including silent pauses, when imagining music?

The first paper

In “The Music of Silence Part 1: Responses to Musical Imagery Encode Melodic Expectations and Acoustics,” the researchers examined whether melodic expectations modulate responses to imagined music, as they prominently do during listening. Such expectation-driven modulations reflect key aspects of the human musical experience, such as how music is learned, engaged with, and enjoyed.

The study was based on electroencephalography (EEG) recordings from 21 professional musicians who listened to and imagined Bach chorales. Regression analyses showed that neural signals produced during musical imagery can be predicted accurately, as they can during actual listening, and that these signals were robust enough to identify the imagined musical piece from the EEG.
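The story does not spell out the regression model. A widely used option in EEG studies of natural listening is the temporal response function (TRF): a ridge regression that maps time-lagged stimulus features onto the EEG. The minimal Python sketch below assumes that approach for a single channel and a single feature; the function names are hypothetical, and random features and simulated EEG stand in for real data. Identification is framed here as picking the candidate piece whose predicted EEG best matches a separate recording, with correlation as an assumed scoring choice.

```python
import numpy as np

def lagged_design(stim, n_lags):
    """Design matrix whose column k is the stimulus delayed by k samples (zero-padded)."""
    T = len(stim)
    X = np.zeros((T, n_lags))
    for k in range(n_lags):
        X[k:, k] = stim[:T - k]
    return X

def fit_trf(stim, eeg, n_lags, alpha=1.0):
    """Ridge regression from lagged stimulus to one EEG channel: w = (X'X + alpha*I)^-1 X'y."""
    X = lagged_design(stim, n_lags)
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_lags), X.T @ eeg)

def predict_eeg(stim, w):
    """Filter the stimulus feature through the fitted kernel (a causal convolution)."""
    return lagged_design(stim, len(w)) @ w

def identify(eeg, candidates, w):
    """Pick the candidate piece whose predicted EEG correlates best with the recording."""
    scores = [np.corrcoef(predict_eeg(s, w), eeg)[0, 1] for s in candidates]
    return int(np.argmax(scores)), scores

# Synthetic demo: everything below is simulated, not real EEG.
rng = np.random.default_rng(0)
n_lags, T = 20, 2000
true_kernel = np.sin(np.linspace(0, np.pi, n_lags))   # hypothetical response shape
pieces = [rng.standard_normal(T) for _ in range(3)]   # stand-ins for per-piece note features
clean = predict_eeg(pieces[1], true_kernel)           # noiseless response to piece 1
train_eeg = clean + 0.5 * rng.standard_normal(T)      # one trial used for fitting
test_eeg = clean + 0.5 * rng.standard_normal(T)       # a separate trial used for identification

w = fit_trf(pieces[1], train_eeg, n_lags)
best, scores = identify(test_eeg, pieces, w)
print("identified piece:", best)
```

With real recordings, the kernel would be fit and evaluated with cross-validation across trials, and the features would describe the music itself (for example note onsets or expectation measures) rather than random numbers.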

The results suggest that imagining naturalistic melodies elicits cortical responses with detailed temporal dynamics and expectation-related modulations comparable to those recorded during actual music listening. The neural signal recorded in the “imagery” condition could be used to robustly identify the imagined melody (and its imagined notes) with a single-trial classifier.

The responses shared substantial characteristics across participants and were strong and detailed enough to be robustly and specifically associated with the musical pieces that the participants listened to or imagined.

The study demonstrates for the first time that melodic expectation mechanisms are encoded as faithfully when one imagines music as they are during actual listening. This finding hints at the nature and role of musical expectation in setting the grammatical markers of our perception. Music is an elaborate symbolic system with complex “grammatical” rules that are conveyed via acoustic signals. Just as in speech, expectation mechanisms are used to parse musical phrases and extract grammatical features to be used later for other purposes.

Because melodic expectations are encoded similarly in both cases, imagery likely induces the same emotions and pleasure felt during listening. This may help explain why musical imagery is such a versatile tool for creating music and why it plays a significant role in music education.

The systems perspective

From a systems perspective, auditory imagery responses can be thought of as “predictive” responses, induced by top-down processes that normally model how an incoming stimulus is perceived in the brain, or by the perceptual equivalent triggered by the motor system. They should therefore be treated as full-fledged top-down predictive signals. Both studies in this series point to an inverted polarity of imagery responses relative to listening responses. Such an inversion would facilitate the comparison between bottom-up sensory activation and its top-down prediction, generating the “error” signal that predictive coding theories have long postulated to be the critical information propagated deep into the brain.
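The inverted-polarity idea can be illustrated with a toy numerical sketch. It assumes, purely for illustration, that the sensory response and the top-down prediction are Gaussian-shaped waveforms that combine by simple addition; the shapes, amplitudes, and function names are hypothetical and do not come from the papers.

```python
import numpy as np

# Time axis: 0-500 ms in 2 ms steps (an arbitrary, hypothetical sampling choice).
t = np.linspace(0.0, 0.5, 251)

def evoked(amp, latency=0.1, width=0.03):
    """Gaussian bump used as a stand-in for an evoked response."""
    return amp * np.exp(-((t - latency) ** 2) / (2 * width ** 2))

def prediction_error(sensory, inverted_prediction):
    """Because the prediction has inverted polarity, adding it to the sensory
    response subtracts the expected signal, leaving only the mismatch."""
    return sensory + inverted_prediction

note = evoked(1.0)          # bottom-up response to a heard note
prediction = -evoked(0.9)   # top-down prediction of that note, with inverted polarity
silence = np.zeros_like(t)  # no sound at all
no_prediction = np.zeros_like(t)

expected_note = prediction_error(note, prediction)         # note arrives as predicted
unexpected_note = prediction_error(note, no_prediction)    # note arrives unpredicted
expected_silence = prediction_error(silence, prediction)   # predicted note never comes

for name, err in [("expected note", expected_note),
                  ("unexpected note", unexpected_note),
                  ("expected silence", expected_silence)]:
    print(f"{name}: peak |error| = {np.abs(err).max():.2f}")
```

The well-predicted note leaves only a small residual, while the third case yields a sizable signal in the absence of any sound, which is the kind of response the second paper looks for during musical silences.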

The second paper

In the second paper, “The Music of Silence Part 2: Music Listening Induces Imagery Responses,” the researchers studied the brain’s ability to learn and detect melodies by predicting upcoming musical notes, a process that elicits instantaneous neural responses as the incoming music is compared against our expectations. Predictive processing theories describe rhythmic and melodic perception as the brain’s continuous attempt to anticipate the timing and identity of upcoming musical events.

To investigate the neural encoding of silences during music, Shamma, Di Liberto, and Marion again used EEG. They recorded the brain activity of professional musicians as they listened to and imagined Bach melodies, and compared the neural signals recorded during the frequent silent pauses in the music the participants heard with those recorded when the participants were asked to imagine the same melody. This allowed the researchers to investigate the neural components the brain generates on its own, when no listening is involved.

The research demonstrates that robust neural activity consistent with prediction error signals emerges during the meaningful silences of music and that this activity is modulated by the strength of musical expectations. Similar neural signals emerged for both heard and imagined notes and silences, consistent with the idea that the internally generated “music of silence” reflects the brain’s continuous attempt to predict plausible upcoming notes.
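The story does not say how the strength of expectations was quantified. One common measure is surprisal, the negative log probability of an event given its context, -log2 P(next event | previous event). The Python sketch below assumes surprisal from a first-order (bigram) Markov model over notes, with silences treated as their own event type; it is a deliberately simple stand-in for richer statistical models of melodic expectation, and the corpus, token names, and functions are hypothetical.

```python
import math
from collections import Counter, defaultdict

REST = "REST"  # hypothetical token marking a silent pause

def train_bigram(melodies):
    """Count how often each event follows each other event across melodies."""
    counts = defaultdict(Counter)
    for melody in melodies:
        for prev, nxt in zip(melody, melody[1:]):
            counts[prev][nxt] += 1
    return counts

def surprisal(counts, prev, nxt, vocab_size, alpha=1.0):
    """Surprisal in bits, -log2 P(nxt | prev), with add-alpha smoothing so that
    never-seen continuations still get a small nonzero probability."""
    following = counts.get(prev, Counter())
    p = (following[nxt] + alpha) / (sum(following.values()) + alpha * vocab_size)
    return -math.log2(p)

# Toy training corpus of note names and rests (made up for illustration).
corpus = [
    ["C4", "D4", "E4", REST, "C4", "D4", "E4", REST],
    ["C4", "D4", "E4", "F4", "E4", "D4", "C4", REST],
]
vocab = {event for melody in corpus for event in melody}
model = train_bigram(corpus)

s_rest = surprisal(model, "E4", REST, len(vocab))  # pause after E4: seen twice
s_c4 = surprisal(model, "E4", "C4", len(vocab))    # C4 after E4: never seen
print(f"E4 -> REST: {s_rest:.2f} bits, E4 -> C4: {s_c4:.2f} bits")
```

In this toy corpus, the pause after E4 is a strongly expected event (lower surprisal), while a jump from E4 to C4 is unexpected (higher surprisal); a measure like this is what allows neural responses to be related to how strongly a note or silence was expected.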

The study provides direct evidence that, in both listening and auditory imagery, the brain uses an internal predictive model to encode our conceptions and expectations of music, which can then be compared with the actual sensory stimulus, if one is present. The results link neural activity during auditory imagery to this general predictive mechanism, which applies to both listening and imagery. This unifying perspective on auditory predictions has implications for computational models of sensory perception.

Published October 14, 2021