Learning Age and Gender Adaptive Gait Motor Control-based Emotion Using Deep Neural Networks and Affective Modeling

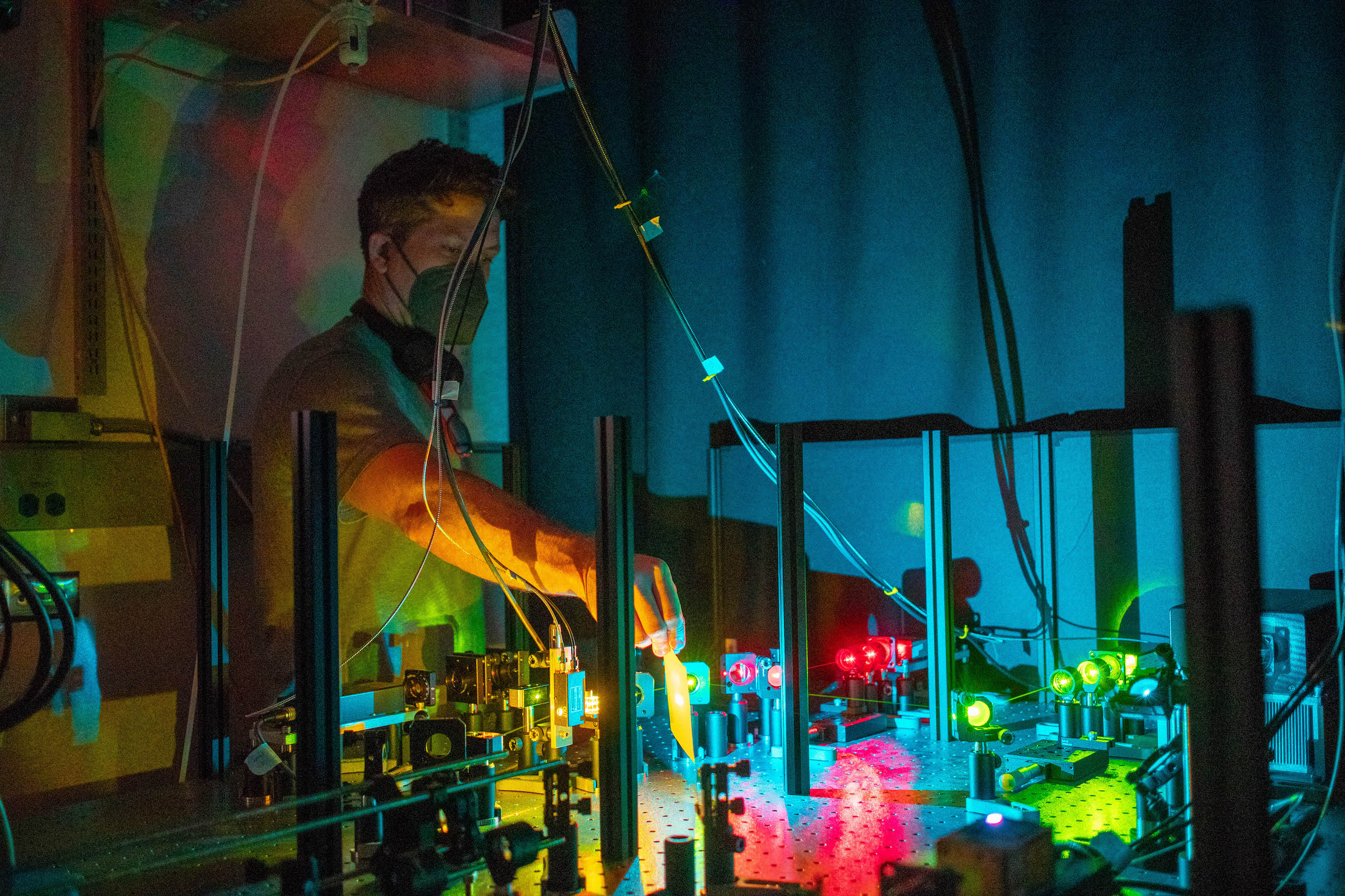

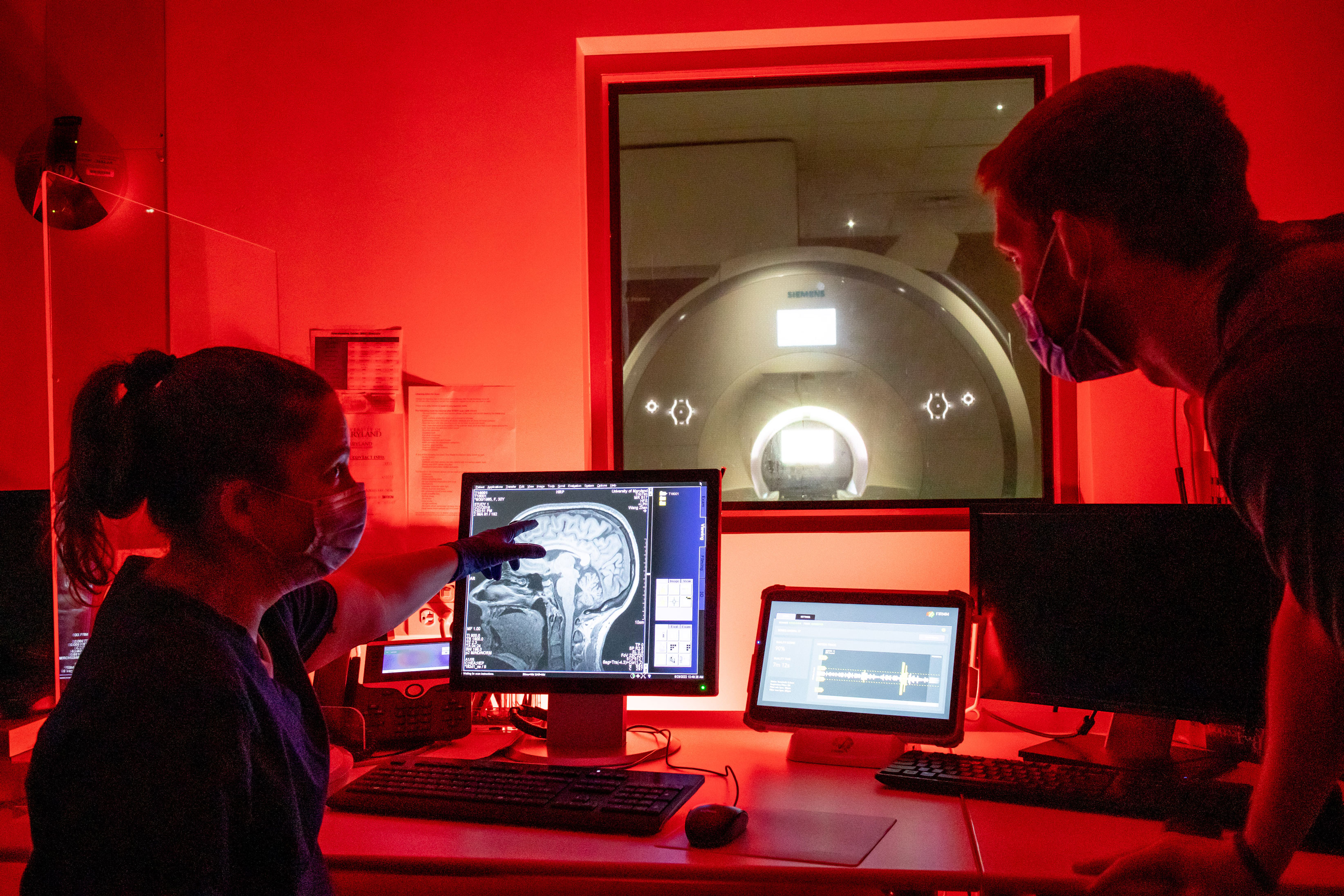

Detecting and classifying human emotion is one of the most challenging problems at the confluence of psychology, affective computing, kinesiology, and data science. This research examines the role of age and gender in gait-based emotion recognition, using an autoencoder-based semi-supervised deep learning algorithm to learn perceived human emotions from walking style.
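To illustrate what "autoencoder-based semi-supervised" can mean in practice, here is a minimal sketch of one common form of such a training objective: every gait sample contributes a reconstruction error, and only samples with an emotion label add a classification term. This is an assumption made for illustration, not the project's actual model. The function names, the tuple layout, and the `weight` parameter are hypothetical, and age and gender are not modeled here.

```python
import math

def reconstruction_loss(x, x_hat):
    """Mean squared error between a gait feature vector and its reconstruction."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

def cross_entropy(probs, label):
    """Negative log-probability assigned to the true emotion label."""
    return -math.log(probs[label])

def semi_supervised_loss(batch, weight=1.0):
    """Average loss over a batch of (x, x_hat, probs, label) tuples.

    Hypothetical layout: x is the input gait feature vector, x_hat the
    autoencoder's reconstruction, probs the predicted emotion probabilities,
    and label the emotion index, or None for an unlabeled gait.
    """
    total = 0.0
    for x, x_hat, probs, label in batch:
        # Every sample, labeled or not, trains the autoencoder.
        total += reconstruction_loss(x, x_hat)
        # Only labeled samples train the emotion classifier.
        if label is not None:
            total += weight * cross_entropy(probs, label)
    return total / len(batch)
```

In this sketch, unlabeled walking sequences still shape the learned representation through the reconstruction term, which is the usual motivation for a semi-supervised setup when emotion labels are scarce.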

This BBI seed grant gave rise to Project Dost, an AI-driven virtual humanoid agent designed to converse and behave like a real human.